Stable Diffusion and AI-generated art are absolutely wild in every way

After reading Andy Baio's latest post about AI-art generators and artist rights, I reached out and had him walk me through how to experience it myself. We followed the instructions in this video:

How you go about it

It took about an hour of tinkering, then about another hour of processing, before I was at a console making new artwork.

It involves the following steps:

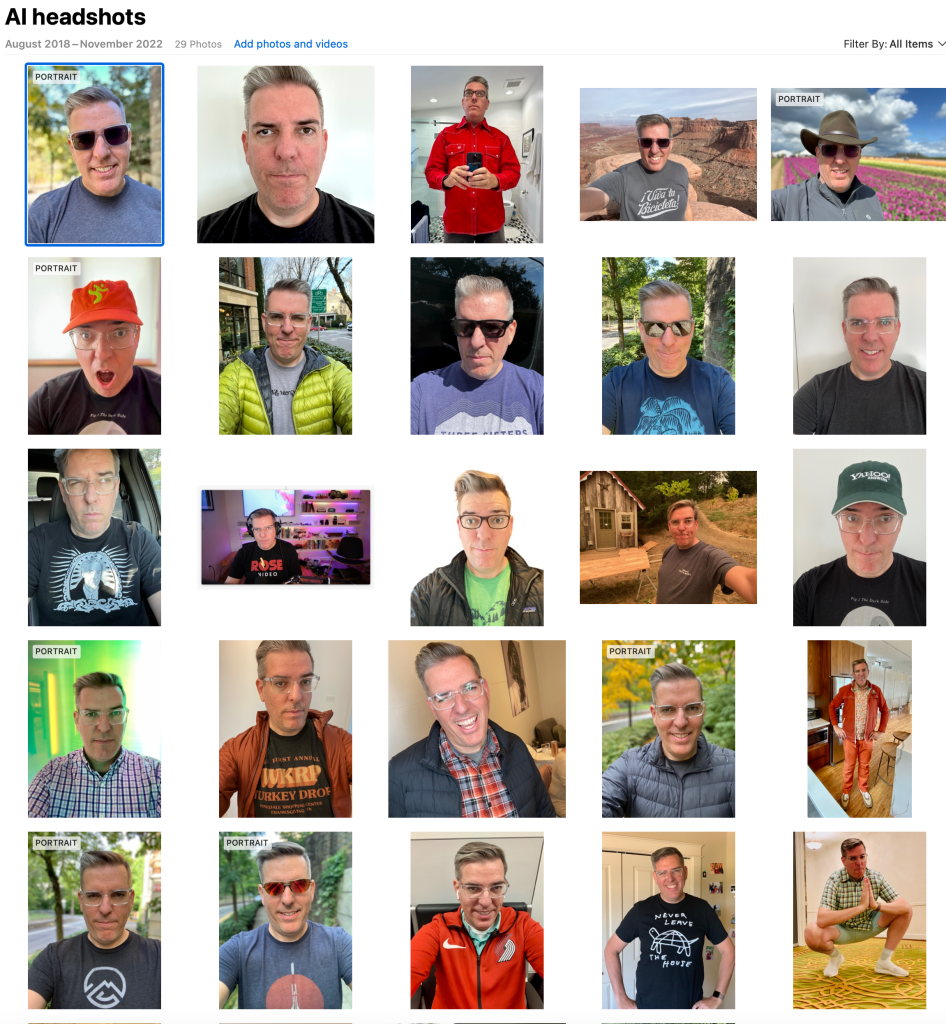

- Pick out 20-30 portraits of yourself, export them all to a directory

- Use Birme to cut them all up into 512px squares (you get to adjust the crop on them in a browser)

- Export the images to a new directory on your desktop, then rename them all with a unique identifier and a number (I used my full name with no vowels followed by the name of the AI art engine I used, so the images had nonsense names like mtthghydrmbth_1.jpg, etc.)

- Make a copy of this Dreambooth Colab Doc on Google Drive

- Buy $9.99 of credit on Google Colab to get CPU/GPU time (make sure you use Google Chrome)

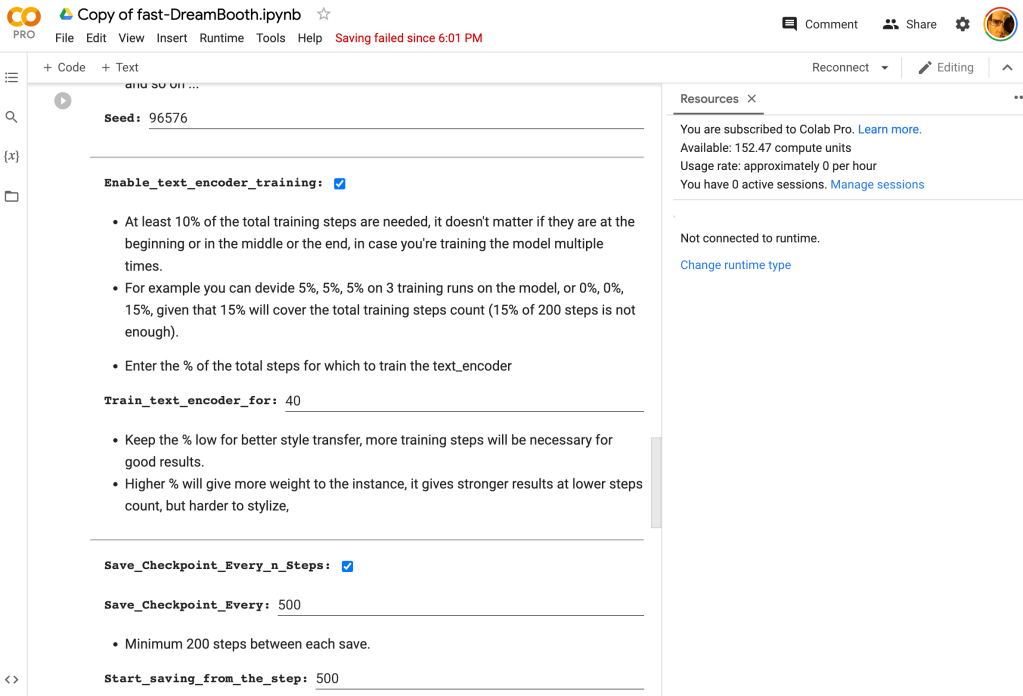

- Spin up a new instance in Google Colab and start going step by step through the Colab doc (this is super geeky and super confusing, but it's just a series of Python code chunks you run in order)

- After you connect your Google Drive, download the model, upload your images, and start training, it'll churn for about an hour

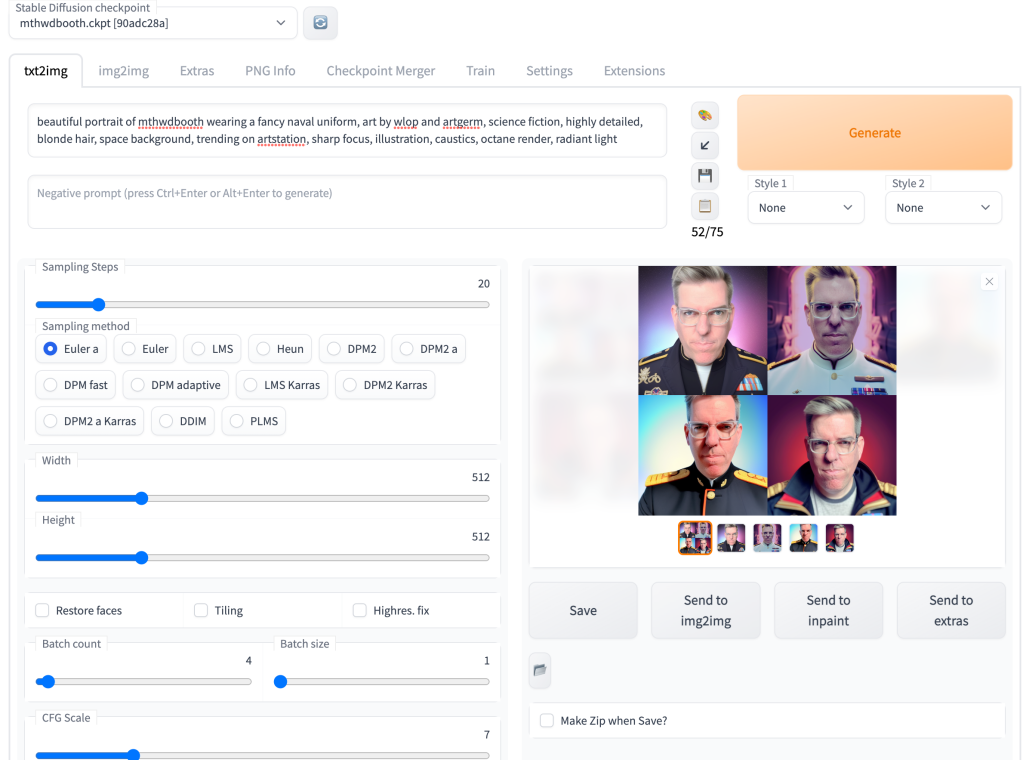

- Once that's complete, you can run prompts directly against your newly trained model.

- I used Lexica to find prompts. Simply click any image you like and want to mimic, copy its prompt, and change the subject name to the custom name you set in the Colab doc (the one that matches your image names).
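If you'd rather script the prep than do it by hand, the crop and rename steps above can be sketched in plain Python. This is a minimal sketch under a few assumptions: it uses a centered square crop (Birme lets you adjust each crop by hand), it treats only a, e, i, o, u as vowels, and "Jane Example" is a hypothetical name. `make_identifier`, `center_square_box`, and `plan_renames` are illustrative helpers, not part of the Dreambooth notebook.

```python
# Hypothetical helpers that mirror the manual prep steps; not from the Colab doc.
TARGET_SIZE = 512  # the post crops every photo to a 512px square

VOWELS = set("aeiou")  # assumption: "y" is kept, as in the post's example name


def make_identifier(full_name: str, engine: str) -> str:
    """Name plus engine name, lowercased, with spaces/punctuation and vowels removed."""
    letters = (c for c in (full_name + engine).lower() if c.isalnum())
    return "".join(c for c in letters if c not in VOWELS)


def center_square_box(width: int, height: int) -> tuple[int, int, int, int]:
    """(left, top, right, bottom) of the largest square centered in the image."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def plan_renames(filenames: list[str], identifier: str) -> dict[str, str]:
    """Map each source file to '<identifier>_<n>.jpg', numbered from 1 in sorted order."""
    return {
        name: f"{identifier}_{n}.jpg"
        for n, name in enumerate(sorted(filenames), start=1)
    }


if __name__ == "__main__":
    ident = make_identifier("Jane Example", "dreambooth")  # hypothetical name
    print(ident)  # jnxmpldrmbth
    print(center_square_box(4032, 3024))  # (504, 0, 3528, 3024)
    print(plan_renames(["IMG_0002.jpg", "IMG_0001.jpg"], ident))
```

To actually crop, you could pass each box to Pillow's `Image.crop()`, call `.resize((TARGET_SIZE, TARGET_SIZE))`, and save under the planned name. Keeping the box math separate makes it easy to check before touching any files.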

Here are the images I used as the source to train the model, just a bunch of random photos of me over the years.

Here's what the UI looks like in Colab as you're setting everything up.

And here's the Stable Diffusion console for sending prompts to it.
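The Lexica step from the list above (copy a prompt, then swap its subject for your custom identifier) is simple enough to sketch. This assumes a plain case-insensitive text substitution; `personalize_prompt` and the sample prompt are hypothetical, not something the notebook or Lexica provides.

```python
import re


def personalize_prompt(prompt: str, subject: str, identifier: str) -> str:
    """Swap every case-insensitive occurrence of `subject` for `identifier`."""
    pattern = re.compile(re.escape(subject), flags=re.IGNORECASE)
    if not pattern.search(prompt):
        raise ValueError(f"subject {subject!r} not found in prompt")
    # A function replacement keeps any backslashes in `identifier` literal.
    return pattern.sub(lambda _match: identifier, prompt)


if __name__ == "__main__":
    # Hypothetical prompt in the style of ones copied from Lexica.
    copied = "portrait of an old sailor as a Jedi knight, oil painting, highly detailed"
    print(personalize_prompt(copied, "an old sailor", "jnxmpldrmbth"))
    # portrait of jnxmpldrmbth as a Jedi knight, oil painting, highly detailed
```

Raising an error when the subject isn't found is a deliberate choice: a silent no-op would send the original prompt to the model and produce images of someone else.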

Everything you generate is exported as 512px JPEGs to a directory in Google Drive, and each render took about 10 seconds to create four different versions of a prompt.

Last night I spent about two hours sending prompts with some direction from Andy. For some subjects there are tons of reference images, so it's easy to have a computer deepfake your face onto a Marvel character, because there are thousands of photos of Captain America taken from every angle.

The output

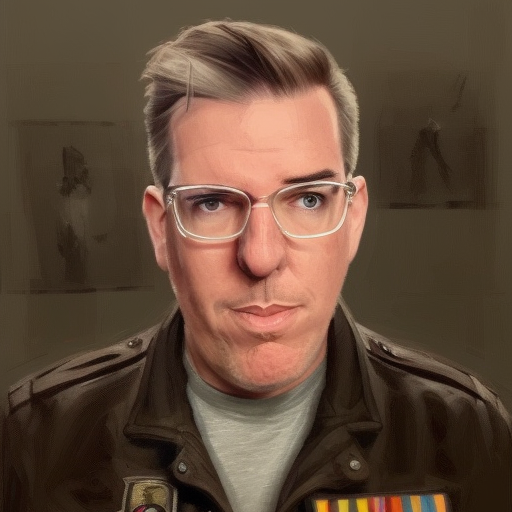

In total, this cost me about $3 in Google Colab compute time to produce over 200 images from 70-80 prompts over the course of a couple of hours. I never posed for any of these photos; all the illustrations and photos here were generated by the model. You can view a larger set of them on Flickr in this album. I removed the less successful ones, where I had 15 fingers or three rows of teeth, and a lot of the cartoon prompts I tried ended up as total nonsense. Many of my prompts were about The Simpsons, Star Wars, Spider-Man, Captain America, old posters, and various art styles.

So obviously, this is fascinating and kind of incredible and of course a little bit scary.

Now what?

My first thought after seeing this output was the obvious ethical problem it raises for illustrators working today. At Slack, we once paid an artist to make sketches of our faces for author bios, and we had the same artist do one for every writer so they had some cohesion. I believe we paid about $500-1,000 per portrait, and I still use mine to this day sometimes.

They were done by a thoughtful, skilled artist, and the final product came out amazing. Of the 200 or so images I made last night, about half are impressive (and about 25% are kind of amazing), while the other half have loads of errors. But no one is going to ignore that one method costs hundreds of dollars and takes a couple of weeks, while the other takes a few seconds after a handful of minutes of tinkering and costs a dollar or two.
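Putting the rough figures from this post side by side makes the gap concrete. This sketch only reuses numbers quoted in the post (about $3 of compute, over 200 images, about 10 seconds per render of four versions, $500-1,000 per commissioned portrait); because the image count is "over 200", the per-image cost is an upper bound.

```python
# Rough figures quoted in the post; "over 200" images makes cost_per_image an upper bound.
COMPUTE_COST_USD = 3.00
IMAGES_GENERATED = 200
SECONDS_PER_RENDER = 10
VERSIONS_PER_RENDER = 4
PORTRAIT_COST_RANGE_USD = (500, 1_000)  # the commissioned Slack portraits

cost_per_image = COMPUTE_COST_USD / IMAGES_GENERATED
seconds_per_image = SECONDS_PER_RENDER / VERSIONS_PER_RENDER
# How many generated images the price of one commissioned portrait would cover.
images_per_portrait = tuple(round(p / cost_per_image) for p in PORTRAIT_COST_RANGE_USD)

print(f"${cost_per_image:.3f} per image")  # $0.015 per image
print(f"{seconds_per_image} s per image")  # 2.5 s per image
print(images_per_portrait)  # (33333, 66667)
```

This ignores the hour or so of setup and training, so it understates the real cost of the first image, but not by enough to change the comparison.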

The next obvious question is what I should even do with all this output. These aren't real photos or paintings and never were; they're just generated from the ether, and it feels dishonest to use them anywhere beyond pure experimentation unless they're clearly labeled as generated.

Some of these make me look way better, and I could see people using better versions of themselves on dating sites. Where is the line between using a bit of Facetune to look your best and posting a completely generated photo that makes you look 20 years younger?

What's next

We're in the early days, and things in this realm are moving FAST. As Andy pointed out in his post, just a few months ago the models weren't producing convincing images, but now they're sometimes so accurate they're scary. I've already seen dozens of startups and apps pop up that make this entire process easier, and I'm sure that within the next few months you'll be able to pay a site ten or twenty bucks, upload photos of yourself, and get a point-and-click interface for generating images of you in any costume, movie, or environment you desire.

It's a very weird, wild world right now, and there are so many ethical and legal questions around it that it's hard to keep up, especially when the underlying tech is shifting so quickly beneath our feet.

Through these experiments, one thing became clear to me: AI-generated art is going to raise very big questions that all of us, and every industry it touches, will have to grapple with.

Update: I've continued to play with this, and here are a few more I've made recently.
