Part 3: Talkabot conference liveblog

I spent last week in Austin, Texas attending the first Talkabot Conference. The first post covered the tutorial day, and the next covered the first day of talks. Today is the conclusion, with my notes from the last day of the conference.

“Day one was sweet, where day two is more savory” - Ben Brown’s opening remarks

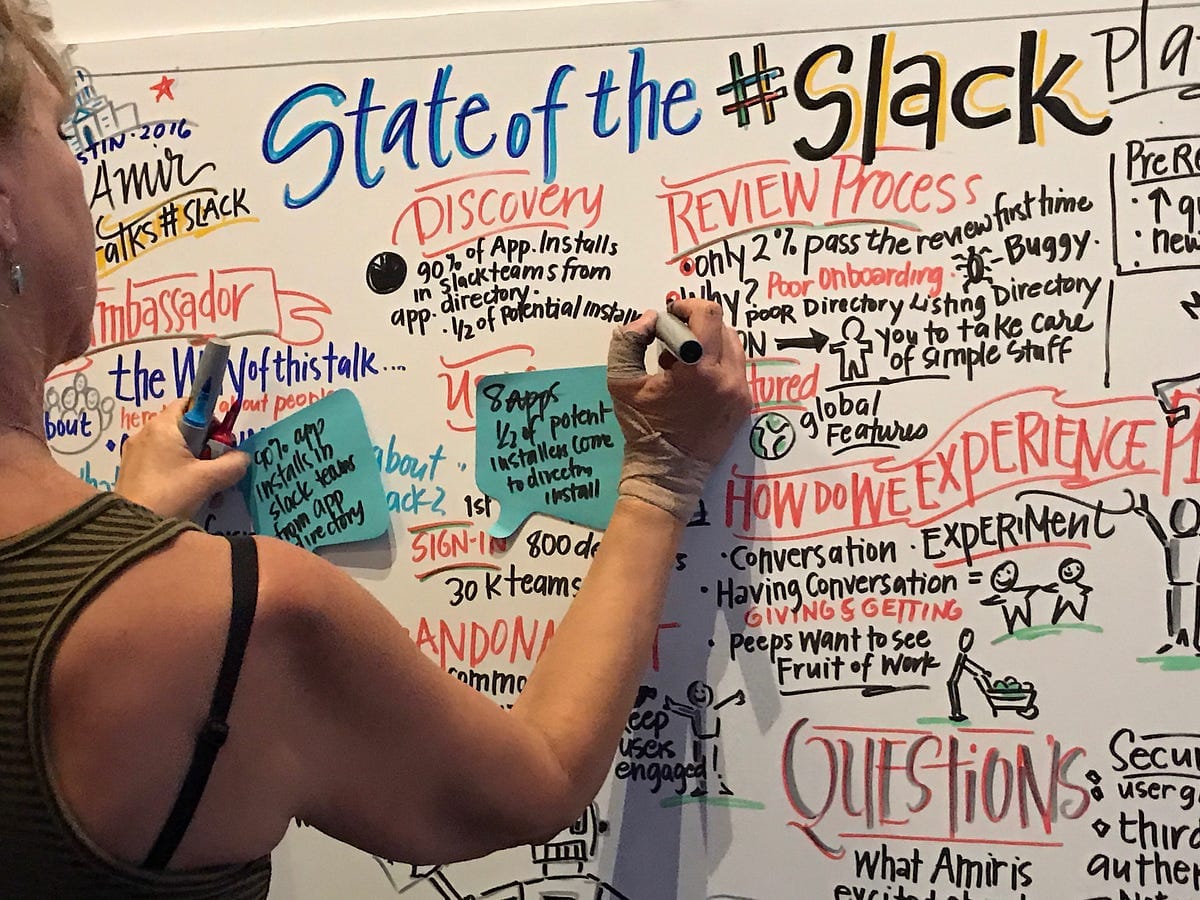

Using Location Data with Marsbot from Foursquare

The final day of Talkabot opened with a talk from the team at Foursquare about their newest project Marsbot.

“Most people only use 3 apps per day, almost everyone uses a messaging app as one of those three, so bots are good for growth and getting into people’s lives, and getting used by them every day.”

“Before you build a bot, it’s good to ask yourself: What are people going to get out of my chatbot? Will users remember to use it when they need it? Will they get annoyed if the bot is constantly messaging to remind them? It’s easy to be inundated if a zillion bots are antagonizing you all day in your messaging apps, and that’s not what we’re shooting for.”

Marsbot is a context bot, not a chat bot: it knows where you are standing, can learn what you like, and can suggest things to you.

Marsbot is SMS-powered. It leverages existing tech and data, location awareness, plus shape data of locations. It uses sentiment analysis and ratings. It has timeliness and seasonality to its predictions and suggestions.

They finish up with a live Marsbot demo. It uses an app to get your location, but responds over SMS. You can tailor your preferences in the app. It opens with an introduction to make it feel more personal. SMS lets users search with simple words: search “hamburger” and it gives results that you can refine with follow-up messages. Reply “closer” and it gives results closer to you; reply “cheaper” and it shows the cheapest nearby places to get a burger.
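To make the refinement flow from the demo concrete, here is a minimal sketch in Python. It is not Foursquare’s implementation: the `Place` fields, the sample data, and the rule that “closer” keeps the nearer half of the results are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Place:
    name: str
    distance_m: int  # distance from the user, in meters
    price_tier: int  # 1 = cheapest, 4 = most expensive


# Hypothetical sample data standing in for a real venue database.
PLACES = {
    "hamburger": [
        Place("Burger Palace", 900, 3),
        Place("Corner Grill", 200, 2),
        Place("Patty Shack", 350, 1),
        Place("Uptown Burger", 1500, 1),
    ]
}


class SearchSession:
    """Keeps the last result set so follow-up messages can refine it."""

    def __init__(self):
        self.results = []

    def handle(self, message):
        text = message.strip().lower()
        if text == "closer":
            # Assumed rule: sort by distance and keep the nearer half.
            nearest = sorted(self.results, key=lambda p: p.distance_m)
            self.results = nearest[: max(1, len(nearest) // 2)]
        elif text == "cheaper":
            # Reorder what is left by price, breaking ties by distance.
            self.results = sorted(
                self.results, key=lambda p: (p.price_tier, p.distance_m)
            )
        else:
            # Anything else is treated as a fresh search term.
            self.results = list(PLACES.get(text, []))
        return [p.name for p in self.results]


session = SearchSession()
print(session.handle("hamburger"))
print(session.handle("closer"))
print(session.handle("cheaper"))
```

The point of the sketch is that each SMS is interpreted against the previous result set, so one-word replies are enough to steer the search.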

The unique angle on Marsbot is that Foursquare built a bot based around their huge existing dataset and service. Most bots we hear about are attached to “new” services without a decade of data behind them. It was interesting to see how they carved a text-based service of just a few key features from their archives of data.

Rob Guilfoyle, Abe.ai

Abe is a bot that talks about money, for Slack. It’s a financial wellness tool for small teams, that lets you plan and track your spending. It can call up live, real financial planners whenever you hit a sticky situation. You add it to Slack then connect to accounts using aggregators, a lot like Mint. You can run statistics against your history. It’s more push than pull, giving you Friday reports of how your accounts are looking and what bills are coming up based on your history, instead of waiting for you to ask questions about money. Abe creates personalized spending plans.

If you ask for a financial pro, a real human does a “hijack” or “handshake”, taking over the Abe bot and writing new messages to you in Slack. It’s handled via sessions and middleware, and they built their own interface for posting to Slack, with specific info about the person requesting help.

Pretty interesting bot/service I hadn’t heard of, and they seem to handle sensitive data well (they never post in channels, everything is in private DMs).

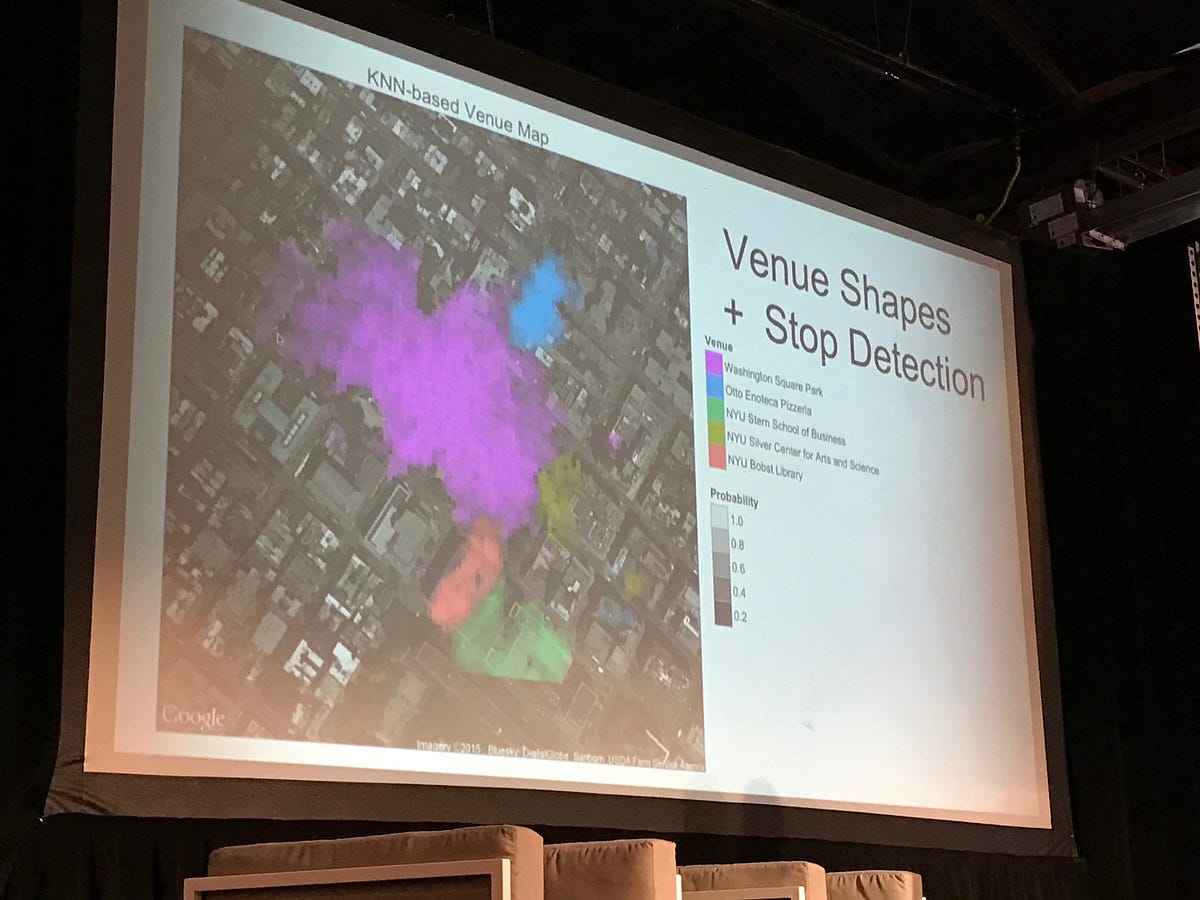

Andy Mauro, Voice Recognition at automat.ai

Andy presented his “lessons from 15 years of speech recognition work”, starting with phone interactions he programmed over 16 years ago. This was a barn-burner of a talk.

He started his career at Nuance in 2000, building a voice assistant. Other landmarks in the industry: in April 2007, Google launched a phone-based version of Google called Goog-411, which led to Google Now and Google Assistant. Siri used Nuance in 2010 as a startup, until Apple bought the Siri tech in 2011. Tellme launched in 1999, was acquired by Microsoft in 2007, and eventually became Cortana.

He plays an automated voicemail tree from UPS, from 2005. It’s terrible and annoying. Lessons from that work: Brand matters, so use a brand voice, establish conversational UIs, all GUIs should express your brand (brand is not the same as persona). Pithiness is key, guide users but don’t inundate them, shorten your messages as much as possible.

“Having a bot that offers up pre-loaded buttons plus allows open Natural Language entry works best, since this lets you offer search and browse patterns that users naturally fall into. Messaging patterns should be similar to the web, how people start with a search, then browse/burrow for exactly what they want. Same should happen with your bot conversations.”

“Getting responses that are “off script” is the norm, since users will type pretty much anything, anywhere in ways it is impossible to always predict. How does your app handle it? It can’t all be canned messages. Routing users to answers reduces frustration, get people close to their destination through menus and options. Error handling hurts, have one level of error handling handled by bots, but then escalate anything beyond that instantly to a human that can interact with them to fix the problem quickly.”
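A rough sketch of that escalation rule in Python, assuming a toy keyword matcher and a made-up `CANNED` answer table (neither comes from the talk): the bot handles one miss itself by offering a menu, then hands the conversation to a human on the next miss.

```python
# Hypothetical canned answers keyed by topic keyword.
CANNED = {
    "hours": "We are open 9am to 5pm.",
    "returns": "You can return items within 30 days.",
}


class Conversation:
    MAX_BOT_ERRORS = 1  # one level of error handling stays with the bot

    def __init__(self):
        self.errors = 0
        self.escalated = False

    def reply(self, message):
        if self.escalated:
            # Once a human owns the thread, the bot stays out of the way.
            return "[forwarded to human agent]"
        topic = self._match(message)
        if topic is not None:
            self.errors = 0
            return CANNED[topic]
        self.errors += 1
        if self.errors > self.MAX_BOT_ERRORS:
            self.escalated = True
            return "Let me connect you with a person who can help."
        # First miss: route the user toward known options.
        return "Sorry, I didn't catch that. You can ask about: hours, returns."

    @staticmethod
    def _match(message):
        words = message.lower().split()
        for topic in CANNED:
            if topic in words:
                return topic
        return None


chat = Conversation()
print(chat.reply("what are your hours"))
print(chat.reply("asdf"))
print(chat.reply("qwerty"))
```

A real deployment would need a proper handoff channel and a way to return control to the bot; this only shows the counting logic.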

“Before you build a customer service bot: if you can’t be better than a live person, don’t deploy it. Voice assistants are generalists, but bots are experts/specialists, so expectations between each are different. Siri and Cortana and Alexa are all generalists, but bots are specialized and do one thing really well.”

“People hate a blinking cursor. Give people direction or start them off with sample questions but also let them search, you need to offer both.”

“Context is great and a hard programming problem. Manage the context of a thread to let people jump around in deep searches and still know what context/options you’ve already asked about. If your bot determined they were looking for Mexican food in SF, but then they change to Italian food, don’t ask for their location all over again, try and maintain context from earlier conversations, stay deep in the tree.”
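The Mexican-to-Italian example can be sketched as simple slot filling. This is an illustrative Python sketch with assumed vocabularies (`CUISINES`, `CITIES`) and a hypothetical `FoodContext` class, not anything shown in the talk: each message only overwrites the slots it mentions, so the location survives a change of cuisine.

```python
import re

# Assumed toy vocabularies; a real bot would use an NLP service here.
CUISINES = {"mexican", "italian", "thai"}
CITIES = {"sf": "San Francisco", "austin": "Austin"}


class FoodContext:
    """Remembers slots across messages so users are not asked twice."""

    def __init__(self):
        self.slots = {"cuisine": None, "city": None}

    def update(self, message):
        for word in re.findall(r"[a-z]+", message.lower()):
            if word in CUISINES:
                self.slots["cuisine"] = word
            elif word in CITIES:
                self.slots["city"] = CITIES[word]
        # Only ask about slots that are still empty.
        missing = [name for name, value in self.slots.items() if value is None]
        if missing:
            return f"Which {missing[0]}?"
        return f"Searching for {self.slots['cuisine']} food in {self.slots['city']}."


ctx = FoodContext()
print(ctx.update("Mexican food in SF"))
print(ctx.update("Actually, Italian"))
```

The second message never mentions a city, but the bot does not ask again because the earlier answer is still in the context.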

“Inertia is big: People won’t change from a phone or the web to a bot even if it’s faster, because they’re already used to how they do things currently. Your bot has to be different and better. People will just maintain on the old system unless the experience is both different and better.”

“Voice assistants are hard and expensive, bots are self-service, must be so easy it is self-explanatory. AI needs to make it easier as it learns and gets smarter. Bots are just messaging plus a bit of AI.”

His wishlist for messaging platform bots and APIs

- Bots should initiate sometimes, should say hi, not just respond to the user talking to it.

- Ghost text should be configurable. Whatever your app’s default “type a message” prompt is should be in your platform API. If you can control the prompt programmatically, you could change during app flows, ask prompts with context and save a few steps.

- Platform parity: innovate on use cases not on platform translation. Slack bots should be different than Kik bots. But it would be nice if Slack had carousels like Kik, and Messenger’s suggested responses worked like Slack’s buttons, etc.

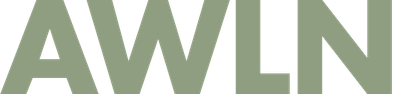

Amir Shevat, State of the Slack Platform

Amir starts by sharing loads of stats about Slack for developers.

Platform

- more than 90% of paid teams use bots (doesn’t include GIPHY)

- average of 8 bots and apps on paid Slack teams

- 17k integrations installed 3.6M times

- Lessons: useful apps do better than fun ones, and bots are only as good as the service they expose.

Discovery

- more than 90% of app installs are from the Slack App Directory

- half of the potential installers in the app directory end up installing

- Lessons: Medium and Product Hunt are good for getting the word out, business-use cases perform better than anything else.

Usage

- about half of apps are retained in first 7 days

- Sign in with Slack used by 800 developers and installed on 17k teams

- The new Events API has been used by more than 400 devs

- Lessons: abandonment is more common than uninstalls. Onboarding is key for usage, spammy apps get uninstalled, no onboarding leads to abandonment, ask installing user to help distribution, ask them to add it to #general and tell other members about it, releasing new features tends to get more installs.

Review/Featuring

- only 2% of apps pass review the first time.

- top problems for the 98% that fail: poor onboarding, buggy functionality, no install page, no support page, no privacy policy, poor descriptions, requesting too many scopes, spamming users in DM or by email

- Lessons: app review could take days, but usually takes a couple of weeks. Featured apps tend to be high-quality apps using new features, apps made by global services, and apps from people that ask Slack to feature them.

Second half of Amir’s talk is more philosophical. He asks: How do we experience products?

“We’ve known how to design conversations for hundreds of thousands of years, vs. building apps for only the last few years. Bots are about having a good conversation.”

He does a presentation experiment. We can sense awkwardness in conversations more easily when we say them out loud than when they are written down. He asks a member of the audience to come up, and they say hi, then he asks for the guy’s Facebook login. The volunteer freezes, then asks why. Amir was showing how awkward many app interactions are: you should give users a reason why, and never ask for information before giving information out. People will give you a lot of information if they get a lot out of the interaction. If you tell users what they’ll get in return when you ask, you’ll have more success.

API.ai with Ilya Gelfenbeyn, building bots that understand users

After a brief intro, he goes through a demo of API.ai. He creates a bot, builds intents, builds a “play music” demo from text interactions. He adds about four example phrases that should initiate music playback based on what users write. He enters another phrase off-script and the API susses out that they meant to play a song, showing off how API.ai figures out meaning and context.
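To illustrate the general idea of matching an off-script phrase to an intent from a handful of examples, here is a deliberately simple Python sketch. It is not how API.ai works internally (the talk did not go into that); it swaps in plain word-overlap (Jaccard) scoring, and the intent names, example phrases, and threshold are all made up.

```python
import re

# Hypothetical intents, each defined by a few example phrases.
INTENTS = {
    "play_music": [
        "play some music",
        "put on a song",
        "play a track",
        "start the music",
    ],
    "stop_music": ["stop the music", "pause playback"],
}


def tokens(text):
    """Lowercase a phrase and return its set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a, b):
    """Share of words two sets have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify(utterance, threshold=0.2):
    """Return the best-matching intent, or None if nothing is close enough."""
    words = tokens(utterance)
    best_intent, best_score = None, 0.0
    for intent, examples in INTENTS.items():
        for example in examples:
            score = jaccard(words, tokens(example))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None


print(classify("could you play a song for me"))
print(classify("what is the weather"))
```

Word overlap breaks down quickly on real input (synonyms, typos, word order), which is the gap that trained language-understanding services aim to fill.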

It currently supports integrations for many popular chat systems, and you can test your apps on a custom page. There’s a training feature: you can upload your old logs to train the system, or look at currently logged failures and teach the AI by answering things live.

Their big clients: Abe.ai and Statsbot for Slack use them. TechCrunch and Forbes both have bots that use their backend too.

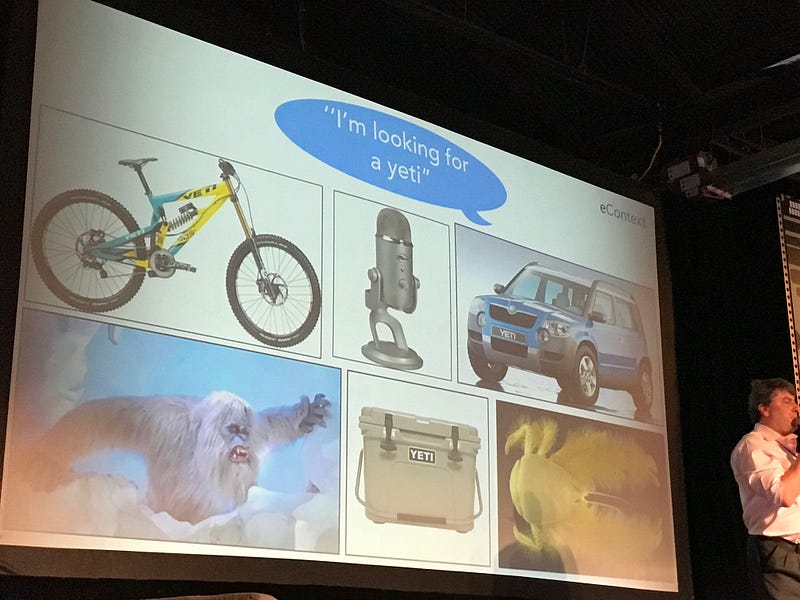

Stephen Scarr, CEO eContext, Smarter Bots through Semantic Classification

Stephen talked about his history in building search engine backends, how they invented word clouds in 2008. There’s no magic fairy AI for bots yet, but his company has a giant corpus of data from language and search topics, and is one of the “world’s most diverse accurate and structured english language knowledge bases suitable for multiple AI applications.”

In 6–8 weeks botbuilder.com will launch, with their tools for building a bot after you upload your products and descriptions.

They work with topic co-occurrence, and general taxonomy. How people search about one thing, but talk about another. He shows a demo of their AI and how it can figure out the context of the word “yeti” which depending on context exists in many different worlds.

Their early tests were against the Walmart API, and they can match products in Walmart.com to any free text phrases you send their bot. They’ve written a whitepaper on bots: https://econtext.com/virtual-agents-whitepaper

Sarah Wulfeck & Lucas Ives, designers of talking Barbie, PullString

“Computer conversations” is what they work on, and computer conversations have hit an inflection point, where computing power is far enough along that we can do really interesting things with text or spoken inputs.

“Conversing with a user is like doing improv. You can script your app’s side of it, but you have no idea what the user will say and need to react, and build apps that can deal with improv speech.”

Bots need to design for off-script situations, figure out what to do with horrible speech, interactions, etc.

Projects: Hello Barbie is a bot. They wrote over 8,000 lines of dialogue, she can talk for 30hrs without repeating a phrase. Can keep track of the names of your pets, siblings, know what your interests are, etc. They had to build a conversational neural network for Barbie.

Jessie Humani is a millennial bot on the Facebook Messenger store, more of a medium-sized project at 3,000 lines of dialogue. Finally, their Call of Duty ARG bot was a small project at only 400 lines of dialogue around an event, but it became bigger than any other social marketing campaign ever done by Activision.

They’re launching PullString as a platform for building bots. There’s a CMS for authoring scripts, an AI engine, and their own APIs.

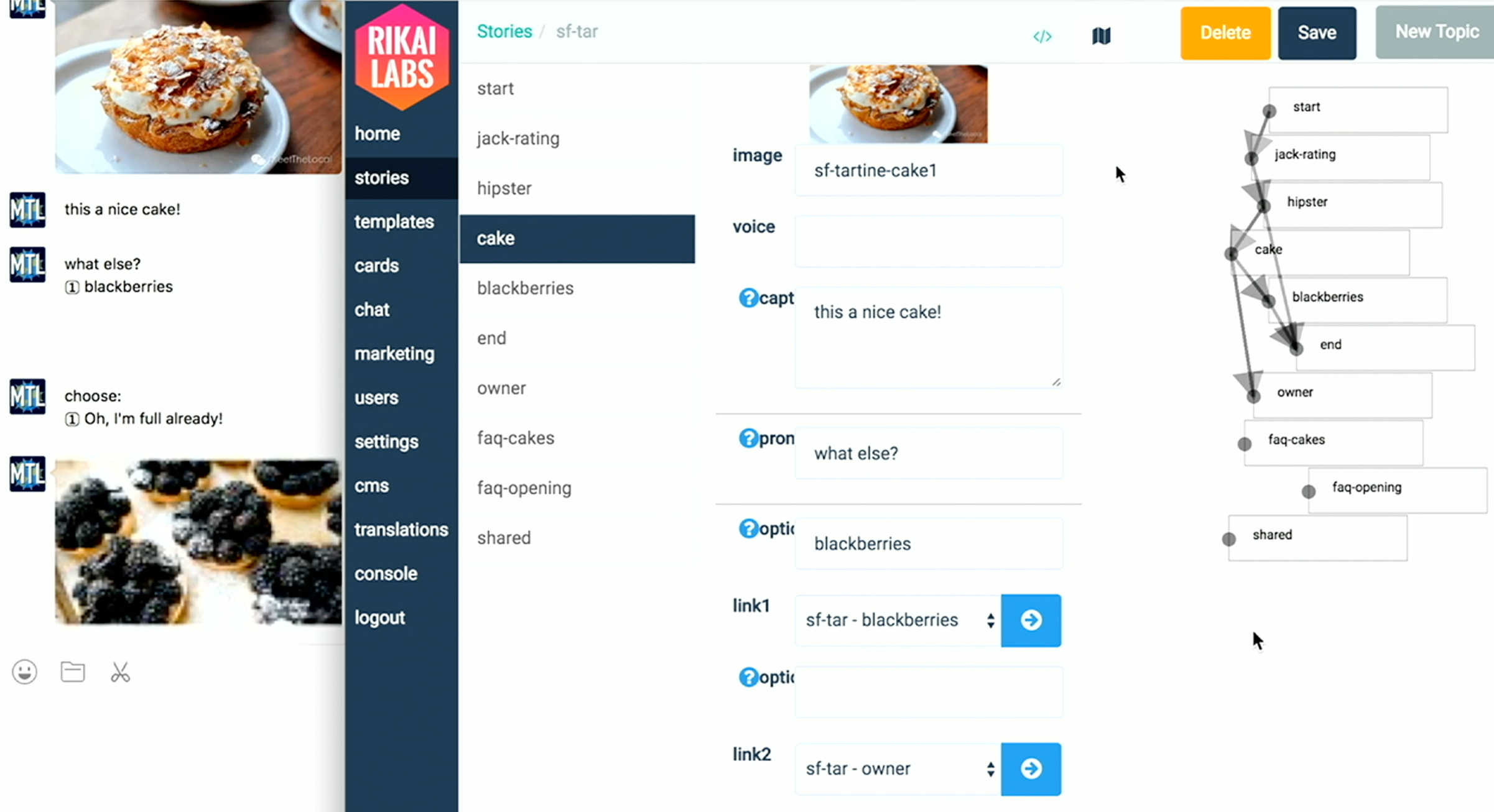

DC Collier, learning from WeChat

DC has built WeChat bots to teach people to read and speak English. You can converse with a teacher that you pay, though the lessons are mostly automated. Live teachers can help train their AI to automate answers. They acquire new users by letting people share their “language certificates” to their moments, and those have a QR code to link users back to the bot.

Chat applications on WeChat can have chat, audio and video, you can write mini articles like a blog or carousel of cards, there’s an embedded web browser, WeChat moments act as a newsfeed, and accepting payments is completely seamless inside WeChat.

They’ve built their own CMS at Rikai to script conversations.

Tips on launching a bot on WeChat:

- Need an official account, must operate as a business in China

- Then you’ll get access to WeChat APIs

- WeChat has their own NLP API, converts voice to Chinese text

- using WeChat API is difficult, putting content into their network requires a myriad of keys and approvals and can take days.

- “Red envelopes” are peer-to-peer payments in the app

- There are coupons, live customer support, survey tools, QR codes are popular, and a vibrant 3rd party ecosystem.

- Marketing can be done through WeChat “stars” like Instagram famous users that can mention your product via QR code.

Mitch Mason, IBM Watson: from the show Jeopardy to the future

History of IBM’s Watson. At the start, they worked for three years on a computer that was huge and noisy and eventually beat Ken Jennings on Jeopardy. Next, they shrank it to a box, then to the cloud. They started with healthcare and insurance. To reach a wider audience, they made Watson’s services easier for any developer to use.

IBM Watson language analysis as a service on Bluemix is most useful to bot authors. They have a suite of APIs and options for bot builders, and have deployed to Facebook Messenger and Slack using various bridges. More is coming soon.

Robert Hoffer, SmarterChild creator

The ActiveBuddy launch video from 2001 plays, and it was amazing in the sense of being a time capsule. Robert describes how bots go way back. One instance is from 1987, when Apple made a “Knowledge Navigator” talking bot that worked on a Mac. He launched ActiveBuddy in 2000, which became the first commercial bot. It lived on ICQ, Yahoo IM, and MSN. It was sold to MSFT, who eventually retired it in 2009.

Robert asks why we keep returning to bots. We keep forgetting technology, as it operates in cycles and people forget what came before. Hollywood is fascinated by AI. Stories going back hundreds of years are about people obsessed with making stuff come to life, so it’s a big part of our culture.

Robert says the key differentiating feature of bots is their speed. The web was slow in the early 2000s, but the SmarterChild bot could answer you instantly.

My thoughts, post-event

Talkabot was a great gathering of people that had largely done work on their own, and it felt like the first time bot authors could talk to other bot authors and share experiences and tips on how they solved similar problems.

Everyone mentioned not wanting to reinvent the wheel over and over again, but it did seem that every big company and team had built their own API toolkits, built their own analytics engines, and many people had built content management systems to let non-programmers tweak their bot and app text. Natural language processing still felt new, but there were plenty of people that had worked on similar problems for decades in other contexts.

Like DVD formats or browser wars, people didn’t want to have to re-write their apps for each platform, and platform API translation as a service looks to be desired and a growth opportunity. But on the other hand, each platform had its own context and flavor. Sure, it would be nice to write a simple bot that worked on both Kik and Slack without having to redo code, but an app used by teens on Kik would likely be much different than one used by middle-aged adults in a business context on Slack.

The best talk in my opinion was Andy Mauro’s, where he summed up 15 years of experience building customer service bots in a highly entertaining and opinionated presentation. All the talks taught the audience something, but he likely shared more lessons per minute than any other. I’d say Erika Hall’s talk on interaction design was a close second for many of the same reasons: decades of experience boiled into actionable advice delivered with opinion and humor.

Overall, I hope that Talkabot bringing everyone together made them realize that everyone didn’t have to reinvent the wheel, that maybe one analytics package was good enough for everyone and would save the time of building your own, and maybe the various bot building and translation APIs could save developers time while offering their work on more platforms.

I look forward to seeing future editions of Talkabot. I think it’ll quickly become a vital event for companies and programmers to gather periodically to share their work with one another.

I hope the last three days of my notes posted here were a good approximation of what it was like to be there, in case you missed the event. The Talkabot site should have video from all the presentations soon.
